In cloud computing, stream processing has emerged as a way to handle and analyze data in real time, letting organizations derive insights quickly and respond to evolving scenarios. It enables businesses to make data-driven decisions as events occur and to stay agile in fast-changing environments. Azure offers tools and services for data ingestion, transformation, and analysis that make it easier to implement scalable, efficient, and cost-effective real-time data solutions. This article covers the key features, practical use cases, Azure-based resources, and best practices for stream processing.

What is Stream Processing?

Stream processing is a data processing approach that focuses on handling data as it arrives, enabling real-time insights and actions. This approach is particularly valuable for scenarios where timing is critical, such as monitoring live events, where immediate feedback or intervention can make a significant impact. In contrast to traditional methods that process data in bulk, stream processing enables continuous and near-real-time analysis, making it ideal for dynamic and rapidly changing environments.

Common applications of stream processing include:

- Detecting fraud in real-time during financial transactions.

- Analyzing data from IoT devices for proactive maintenance or alerts.

- Monitoring social media sentiment to respond to trends as they emerge.

- Powering dynamic recommendation engines in e-commerce platforms.

Azure offers tools like Azure Event Hub, Azure Stream Analytics, and Azure Databricks, which are designed to handle high-throughput, low-latency scenarios. These resources provide the scalability, reliability, and flexibility needed to build robust stream processing pipelines for modern, data-driven applications.

Azure Event Hub

Azure Event Hub is a fully managed service that enables real-time data ingestion, specifically tailored for high throughput streaming scenarios. It serves as a central hub to receive data streams from various sources, store and buffer them, while allowing various downstream services to process or analyze the data. Event Hub is an essential component for building scalable stream processing architectures, capable of handling millions of events per second, making it ideal for IoT telemetry, application logging, and real-time analytics.

Key features of Azure Event Hub include:

- High Throughput: Handles large volumes of streaming data with ease.

- Data Retention: Buffers data for a configurable retention period, enabling flexibility in processing timelines.

- Partitioning: Ensures efficient and ordered processing by splitting data into partitions.

- Integration: Seamlessly integrates with other Azure services like Azure Stream Analytics, Azure Functions, and Azure Databricks.
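The partitioning feature above can be illustrated with a small, local Python sketch. It is not the Event Hub SDK: the partition count, the `partition_for` and `route` helpers, and the choice of SHA-256 are assumptions made for illustration. The point it shows is that hashing a partition key sends every event with the same key to the same partition, so the relative order of those events is preserved.

```python
import hashlib
from collections import defaultdict

PARTITION_COUNT = 4  # hypothetical partition count, chosen for this sketch


def partition_for(key: str, partitions: int = PARTITION_COUNT) -> int:
    """Map a partition key to a partition index with a stable hash.

    SHA-256 is an assumption of this sketch, not the hash the service uses.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partitions


def route(events):
    """Append each (key, payload) event to the log of its partition."""
    logs = defaultdict(list)
    for key, payload in events:
        logs[partition_for(key)].append((key, payload))
    return logs


# Hypothetical device keys with increasing sequence numbers as payloads.
events = [
    ("device-a", 1), ("device-b", 1), ("device-a", 2),
    ("device-b", 2), ("device-a", 3),
]
logs = route(events)
```

Because ordering is only preserved within a partition, a key such as a device ID is a natural choice when per-device order matters.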

Event Hub Schema Registry

The Event Hub Schema Registry is a centralized repository for managing and storing the schemas that define the structure of data ingested through Event Hub. It plays an important role in keeping data consistent and compatible across the producers and consumers of streaming data. In real-time data pipelines, especially long-running ones, the structure of the data can evolve; this is known as schema drift. For instance, a field might be added to an IoT telemetry payload, or a data type might change in a financial transaction log. Without a schema registry, such changes can lead to processing errors, data mismatches, or pipeline failures, making it difficult to maintain robust streaming applications.

The Schema Registry addresses this challenge by enabling schema validation, evolution, and retrieval. It ensures that producers and consumers adhere to agreed-upon schemas, so that updates can be rolled out without breaking existing systems. For instance, a producer can register a new schema version while consumers adapt at their own pace, provided the change respects the compatibility mode (backward or forward) configured for the schema. This capability is vital for long-running streaming scenarios, where data structures are likely to change over time. With support for schema formats such as Avro and JSON Schema, and integration with other Azure services, the Schema Registry provides a scalable way to manage data quality and enable agile development in real-time data pipelines.
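As a rough illustration of what a compatibility check involves, the following Python sketch tests whether a new schema version is backward compatible with an old one. It is a simplified stand-in, not the Schema Registry API: the dictionary schema representation, the `is_backward_compatible` helper, and the field names are all hypothetical, and real Avro resolution rules are richer (they allow certain type promotions, for example).

```python
def is_backward_compatible(old: dict, new: dict) -> bool:
    """Return True if a reader using `new` can decode records written with `old`.

    Simplified rule set for this sketch: a field present in both schemas must
    keep its type, and a field that exists only in `new` must declare a
    default so that old records can still be read.
    """
    for name, spec in new.items():
        if name in old:
            if old[name]["type"] != spec["type"]:
                return False
        elif "default" not in spec:
            return False
    return True


# Hypothetical IoT telemetry schema and three candidate new versions.
v1 = {"device_id": {"type": "string"}, "temperature": {"type": "double"}}
v2_with_default = {**v1, "humidity": {"type": "double", "default": 0.0}}
v2_without_default = {**v1, "humidity": {"type": "double"}}
v2_type_change = {"device_id": {"type": "string"}, "temperature": {"type": "string"}}

results = {
    "added field with default": is_backward_compatible(v1, v2_with_default),
    "added field without default": is_backward_compatible(v1, v2_without_default),
    "changed field type": is_backward_compatible(v1, v2_type_change),
}
```

Under these rules, only the version that adds a field with a default passes, which mirrors the two kinds of drift described above: an added field and a changed data type.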

Azure Stream Analytics

Azure Stream Analytics is a fully managed stream processing service designed to move, transform, and analyze data in real time as it flows between various data inputs and outputs. It provides a simple yet powerful query language for processing streaming data, so that users can extract insights, perform aggregations, and drive real-time decisions.

Key components of Azure Stream Analytics include:

- Input

Azure Stream Analytics supports various input sources for ingesting data. These include:

- Azure Event Hub: Ideal for high throughput, real-time event streaming.

- Azure IoT Hub: Specialized for ingesting data from IoT devices.

- Azure Blob Storage or Azure Data Lake Storage Gen2: Useful for processing historical or batch data alongside real-time streams.

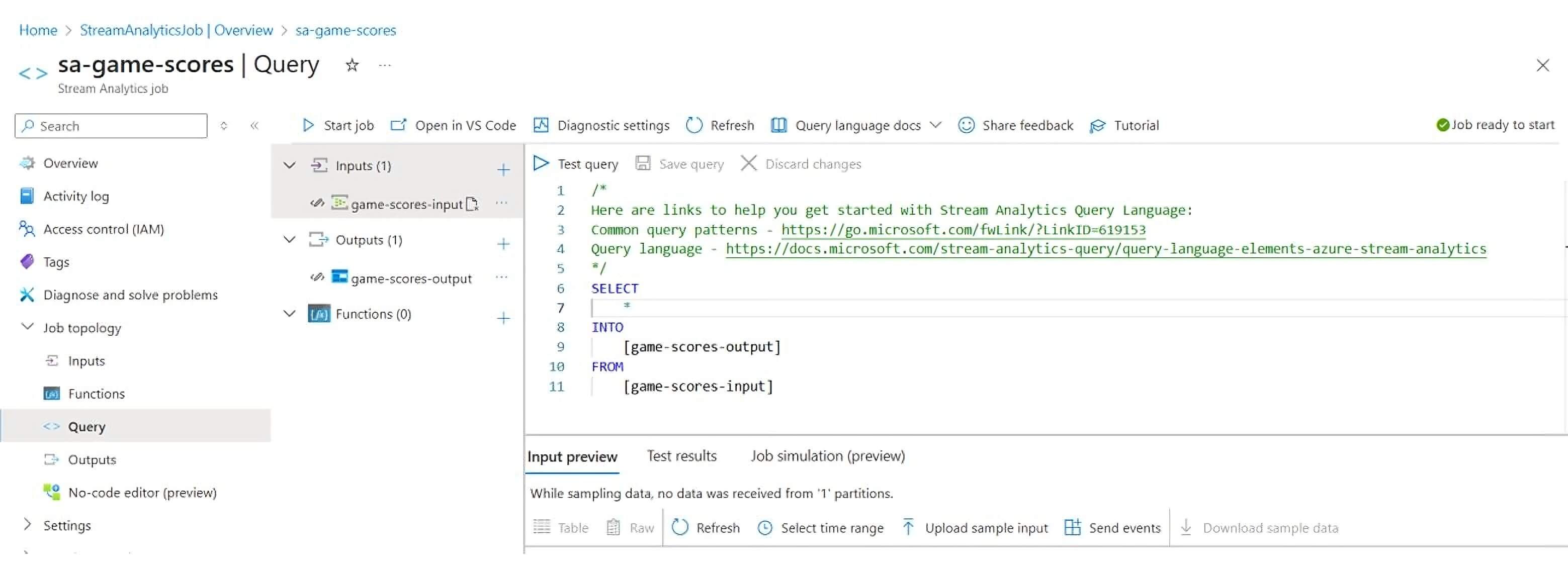

- Query

The service uses a SQL-like query language to define transformations, filtering, and aggregations on the input data. For example:

    SELECT *
    INTO <output-name>
    FROM <input-name>
    WHERE <condition>

The query can include advanced features like windowing functions for performing time-based aggregations, enabling scenarios like monitoring moving averages or detecting trends.

- Output

After processing, Azure Stream Analytics can send the results to various output destinations, such as:

- Azure SQL Database: For structured storage and further querying.

- Azure Blob Storage or Azure Data Lake: For archival or batch processing.

- Azure Cosmos DB: For low-latency, globally distributed NoSQL storage. It is also the Stream Analytics output with native support for upsert operations.

- Power BI: For real-time data visualization and dashboards.

- Other services and systems via custom integrations.

Partitioning

One of the key features of Azure Stream Analytics is its partitioning capability, which allows the distribution of data streams across multiple partitions for parallel processing. Partitioning ensures scalability and efficiency when handling high throughput data streams, enabling faster processing and optimized resource utilization. By aligning partitions with data distribution, such as device IDs or geographic regions, it supports granular control and enhances the performance of complex stream processing pipelines. This makes Azure Stream Analytics ideal for scenarios requiring real-time insights at scale.
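To make the idea of parallel, per-partition processing concrete, here is a minimal Python sketch. It does not use the Stream Analytics engine: the `device_id` partition key, the sample readings, and the helper names are hypothetical. Events are grouped by key, and each group is aggregated independently, which is what allows the work to be spread across workers.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def split_by_key(events, key_field):
    """Group events into partitions by the value of `key_field`."""
    partitions = defaultdict(list)
    for event in events:
        partitions[event[key_field]].append(event)
    return partitions


def average_temperature(partition_events):
    """Aggregate one partition without looking at any other partition."""
    readings = [event["temperature"] for event in partition_events]
    return sum(readings) / len(readings)


def process_in_parallel(events, key_field="device_id"):
    """Aggregate every partition on its own worker and collect the results."""
    partitions = split_by_key(events, key_field)
    with ThreadPoolExecutor() as pool:
        # pool.map yields results in the same order as its input.
        results = pool.map(average_temperature, partitions.values())
        return dict(zip(partitions.keys(), results))


# Hypothetical telemetry readings.
sample_events = [
    {"device_id": "a", "temperature": 20.0},
    {"device_id": "b", "temperature": 30.0},
    {"device_id": "a", "temperature": 22.0},
]
averages = process_in_parallel(sample_events)
```

The aggregation only parallelizes cleanly because no partition needs data from another, which is why aligning the partition key with the grouping key of the query matters.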

Window Aggregations

Window aggregations in Azure Stream Analytics enable time-based operations on streaming data by grouping events into defined intervals for analysis. These aggregations are essential for scenarios where insights depend on temporal patterns, such as calculating averages, sums, or counts over a period. For instance, they are commonly used to monitor metrics like the number of transactions in the past minute or the moving average temperature of IoT devices.

There are five types of windows available: tumbling windows for fixed, non-overlapping intervals; hopping windows for fixed-size intervals that overlap, advancing by a set hop size; sliding windows, which produce output only when an event enters or leaves the window; session windows for grouping events based on periods of activity separated by inactivity; and snapshot windows for grouping events that share the same timestamp. These windows are used for tasks like calculating rolling averages, detecting trends, and identifying anomalies in real-time data streams.
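The difference between tumbling and hopping windows is easiest to see on a handful of timestamps. The following Python sketch counts events per window; it is a local illustration rather than Stream Analytics query syntax. The function names are hypothetical, and the half-open `[start, start + size)` boundary convention is an assumption of this sketch that may differ from the service's own window boundaries.

```python
from collections import Counter


def tumbling_counts(timestamps, size):
    """Count events per fixed, non-overlapping window [start, start + size)."""
    counts = Counter()
    for ts in timestamps:
        counts[(ts // size) * size] += 1
    return dict(sorted(counts.items()))


def hopping_counts(timestamps, size, hop):
    """Count events per overlapping window [start, start + size).

    A new window starts every `hop` time units, so one event can fall into
    several windows. Windows that would start before time 0 are ignored.
    """
    counts = Counter()
    for ts in timestamps:
        start = (ts // hop) * hop  # latest window start at or before ts
        while start > ts - size:
            if start >= 0:
                counts[start] += 1
            start -= hop
    return dict(sorted(counts.items()))


# Hypothetical event times in seconds.
event_times = [1, 4, 5, 9, 12]
per_tumbling_window = tumbling_counts(event_times, size=5)
per_hopping_window = hopping_counts(event_times, size=10, hop=5)
```

With a tumbling window, each event is counted exactly once. With a hopping window of size 10 and hop 5, an event at time 9 is counted in both the window starting at 0 and the window starting at 5.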

Azure Databricks

Azure Databricks is an analytics platform designed for big data and AI, optimized for the Microsoft Azure cloud. Built on Apache Spark, it offers a unified platform for processing large-scale data, making it especially valuable for stream data processing scenarios. Databricks provides the ability to ingest, process, and analyze streaming data in real time, enabling organizations to gain immediate insights and take prompt actions. Its integration with Azure services, such as Azure Event Hub and Azure Stream Analytics, makes it a robust choice for building scalable, low-latency stream processing pipelines.

With its advanced stream processing capabilities, Azure Databricks can handle high-throughput data streams, perform complex transformations, and execute machine learning models on incoming data. It supports Structured Streaming, allowing users to define incremental processing logic over streaming data with the same syntax used for batch operations. This makes it well suited to use cases like fraud detection, IoT data monitoring, and personalized recommendations. Additionally, its collaborative workspace and support for multiple languages, including Python, SQL, and Scala, enhance productivity for teams working on real-time data processing workflows.
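The idea that the same logic can serve both batch and streaming workloads can be sketched in plain Python. This is deliberately not Spark or Databricks code: the `count_by_device` function, the micro-batch size of two, and the sample events are hypothetical. It only illustrates the principle that incremental processing over small batches, with state carried between them, arrives at the same answer as one pass over the full data set.

```python
from collections import Counter


def count_by_device(events, state=None):
    """Add per-device event counts from `events` to `state` and return it."""
    state = Counter() if state is None else state
    for event in events:
        state[event["device_id"]] += 1
    return state


# Hypothetical events.
all_events = [
    {"device_id": "a"}, {"device_id": "b"}, {"device_id": "a"},
    {"device_id": "c"}, {"device_id": "a"},
]

# Batch: one pass over the complete data set.
batch_result = count_by_device(all_events)

# Streaming: the same function applied to micro-batches as they arrive,
# with the running state carried from one micro-batch to the next.
stream_state = Counter()
for start in range(0, len(all_events), 2):
    micro_batch = all_events[start:start + 2]
    count_by_device(micro_batch, stream_state)
```

After the last micro-batch, `stream_state` holds the same counts as `batch_result`; the difference is that the streaming version has a usable intermediate answer after every micro-batch.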

Creating a Simple Stream Processing Pipeline

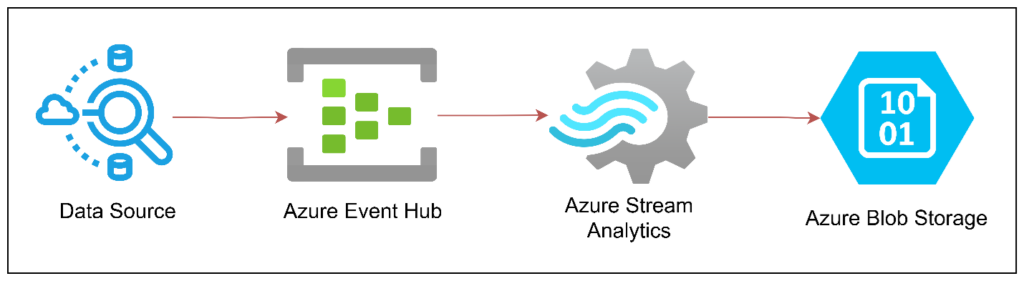

Here are the steps to create the stream processing solution shown in the diagram using Azure Stream Analytics.

- Identify the source data that will be sent as input to the pipeline. This could be simulated data or data collected from real-world sources like IoT devices or logs. Ensure the data format matches the input expectations (e.g., JSON, CSV).

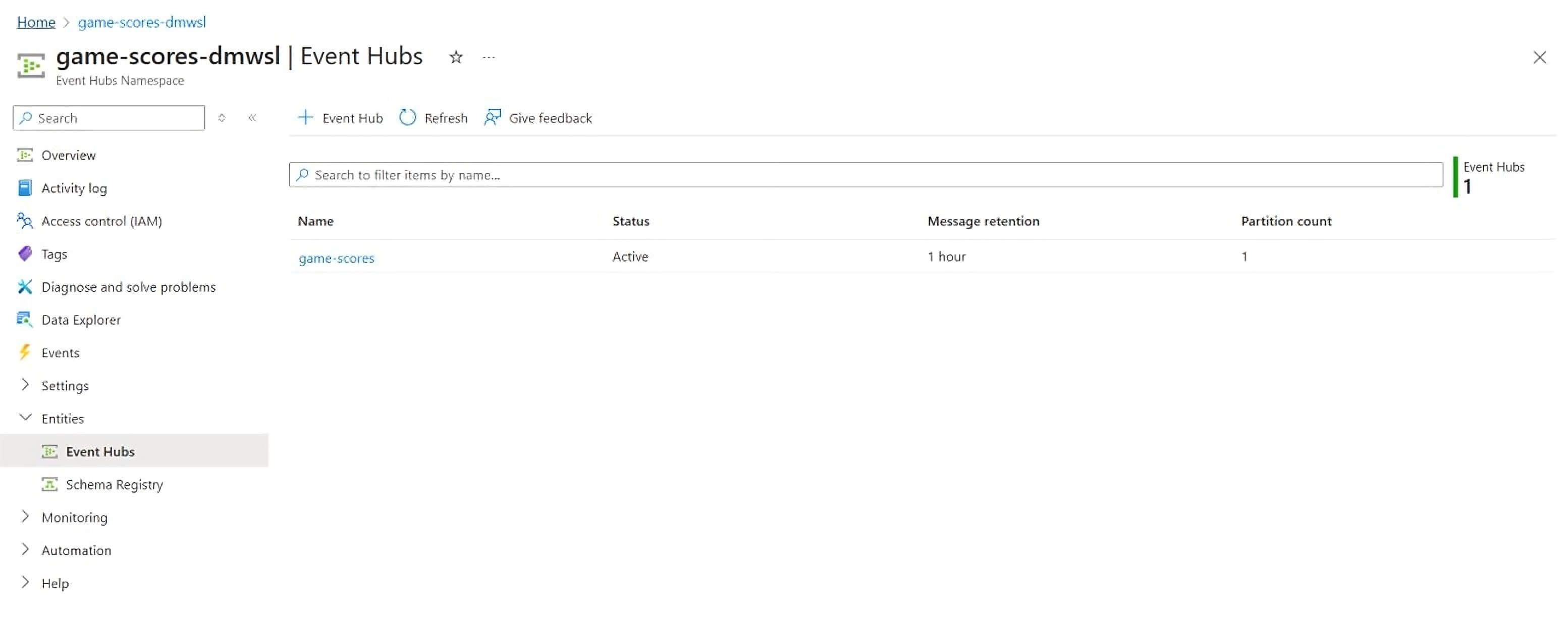

- Create an Event Hub namespace and, inside it, an event hub. Configure the event hub to act as the input source for the streaming data.

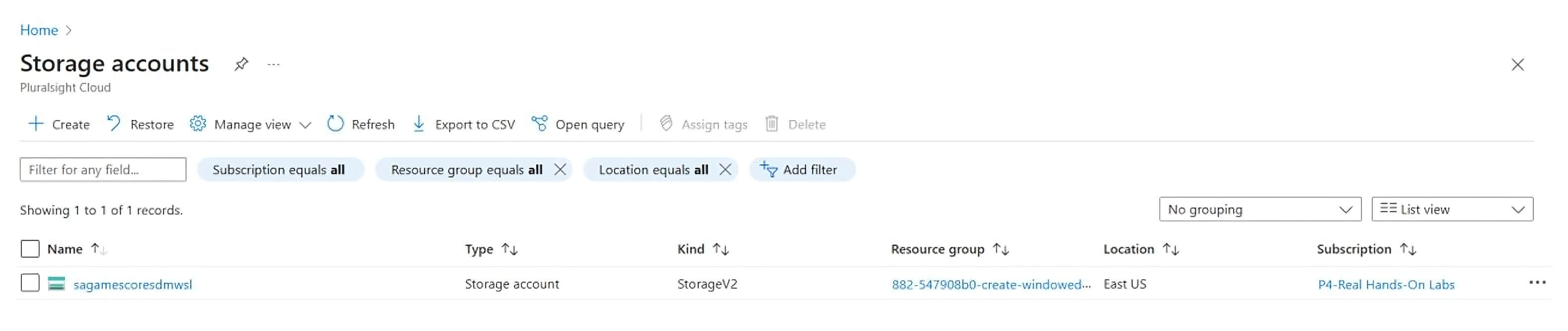

- Navigate to the Azure portal and create an Azure Blob Storage account.

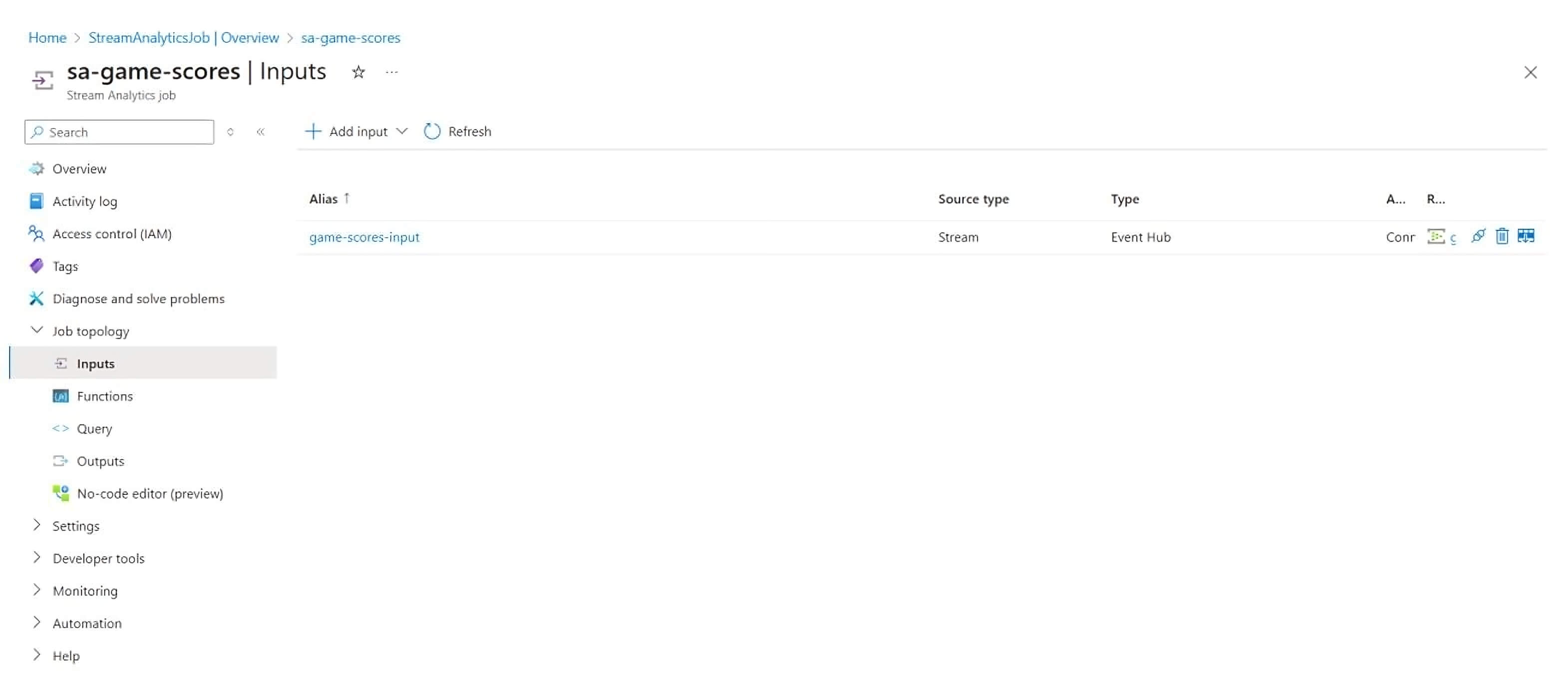

- Create an Azure Stream Analytics job and add the event hub created earlier as the input for the job.

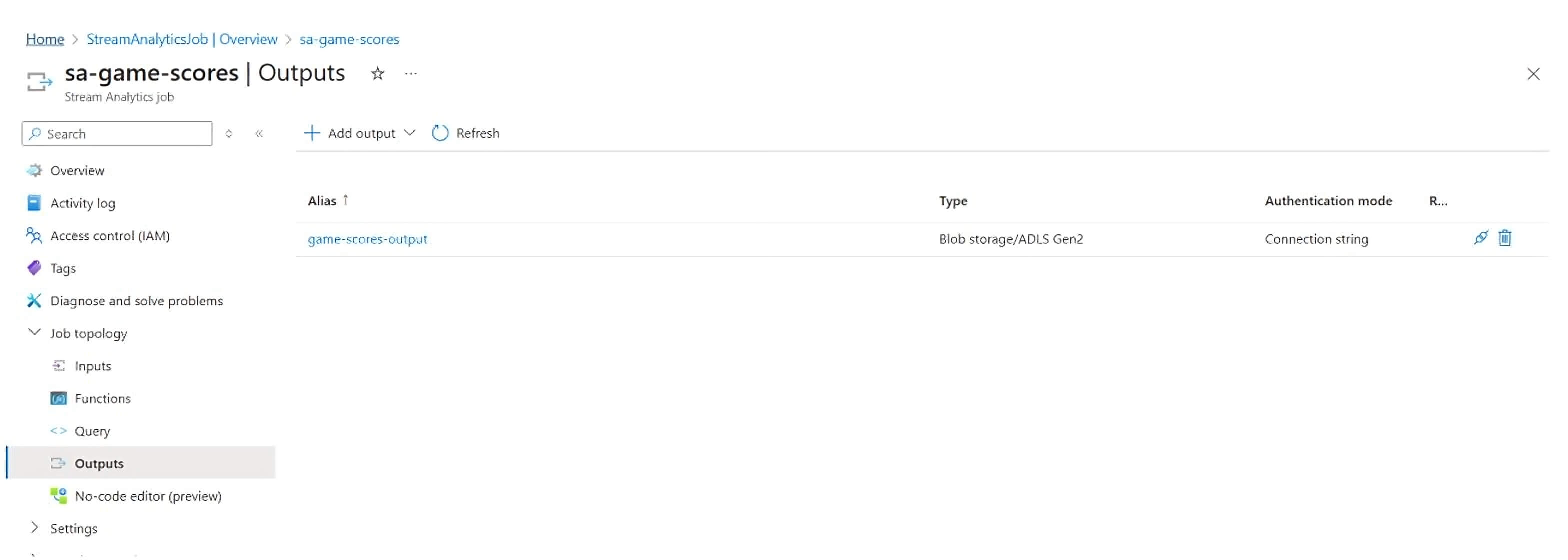

- Add Blob Storage as the output for the Stream Analytics job. You can use an existing container or create a new one.

- Define the query. Azure provides a sample SELECT query in the query editor, which can be used as a starting point.

- After configuring the inputs, query, and outputs, start the Stream Analytics Job. Monitor the job’s performance and ensure data is being ingested, processed, and stored as expected.

- Navigate to the Azure Blob Storage account and verify that the processed data is being saved in the specified container.

This setup efficiently ingests, processes, and stores streaming data, providing a foundation for real-time analytics and actionable insights.
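For the first step, simulated source data can be produced with a few lines of Python. The sketch below generates JSON telemetry lines of the kind that could be sent to the event hub. The field names (`deviceId`, `temperature`, `eventTime`), the value ranges, and the start date are hypothetical, and sending the lines to Event Hub is left out because it depends on the SDK and credentials in use.

```python
import json
import random
from datetime import datetime, timedelta, timezone


def simulate_telemetry(count, seed=0):
    """Yield `count` JSON-encoded telemetry events, one second apart."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    start = datetime(2024, 1, 1, tzinfo=timezone.utc)  # arbitrary start time
    for i in range(count):
        yield json.dumps({
            "deviceId": f"device-{rng.randint(1, 3)}",
            "temperature": round(rng.uniform(15.0, 35.0), 2),
            "eventTime": (start + timedelta(seconds=i)).isoformat(),
        })


lines = list(simulate_telemetry(5))
```

Each element of `lines` is one JSON document, which matches the JSON input format mentioned in the first step.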

Best Practices

- Choose streaming only when necessary

Use stream data processing for scenarios where real-time insights and actions are essential, such as monitoring IoT devices, fraud detection, or dynamic recommendations. For non-time-critical workloads, consider batch processing to optimize resources.

- Process only necessary fields

During implementation, select only the required fields for processing. Filtering out unnecessary data reduces computational overhead and improves efficiency.

- Leverage checkpoints and watermarking

Utilize checkpoints in Event Hub to ensure data processing resumes from the last processed event during disruptions. Implement watermarking in Stream Analytics to track up to which point data is considered clean and processed, enabling better handling of delays or gaps in the data stream.

- Handle late-arriving data

Introduce late data tolerance in Azure Stream Analytics to account for delays in event arrival. This ensures that late events are still processed within the specified tolerance window and included in the final results.

- Implement event replication patterns

Use event replication in Event Hub to duplicate events across partitions or regions. This approach helps manage interruptions, ensures data durability, and achieves higher availability for critical streaming applications.

- Configure exception handling

Design robust exception handling mechanisms to identify and manage data processing errors. Configuring alerts and fallback strategies can prevent data loss and maintain the reliability of the stream processing pipeline.
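Two of the practices above, checkpointing and late-arrival tolerance, can be sketched in a few lines of Python. This is a local illustration under stated assumptions, not Event Hub or Stream Analytics code: the function names are hypothetical, offsets are plain integers, the watermark is taken to be the highest event time seen so far, and late events beyond the tolerance are simply dropped, whereas the service offers its own policies for them.

```python
def process_from_checkpoint(events, checkpoint, handler):
    """Process events whose offset is past `checkpoint`; return the new checkpoint.

    `events` is an iterable of (offset, payload) pairs in offset order.
    """
    for offset, payload in events:
        if offset <= checkpoint:
            continue  # already processed before the interruption
        handler(payload)
        checkpoint = offset
    return checkpoint


def filter_late(event_times, tolerance):
    """Split event times into accepted and dropped, given a lateness tolerance.

    The watermark is the highest event time seen so far; an event is accepted
    if it is no more than `tolerance` behind the watermark.
    """
    watermark = None
    accepted, dropped = [], []
    for event_time in event_times:
        if watermark is None or event_time > watermark:
            watermark = event_time
        if event_time >= watermark - tolerance:
            accepted.append(event_time)
        else:
            dropped.append(event_time)
    return accepted, dropped


# Hypothetical stream: the consumer stops after offset 2, then replays from the start.
stream = [(1, "a"), (2, "b"), (3, "c")]
seen = []
checkpoint = process_from_checkpoint(stream[:2], 0, seen.append)
checkpoint = process_from_checkpoint(stream, checkpoint, seen.append)

# Hypothetical event times in seconds, arriving out of order.
accepted, dropped = filter_late([10, 12, 11, 20, 14, 17], tolerance=5)
```

On the replay, only the event at offset 3 is handled, so nothing is processed twice. In the second example, the event at time 14 arrives after the watermark has reached 20 and falls outside the five-second tolerance, so it is the only one dropped.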

Conclusion

Stream processing has become a pivotal approach in managing and analyzing data in real time, offering organizations the ability to act quickly and make informed decisions as events occur. By enabling continuous data flow and instant transformations, stream processing addresses the demands of modern, data-driven environments where agility and responsiveness are essential. As businesses handle rising volumes of real-time data, implementing a scalable and efficient stream processing architecture becomes increasingly valuable for staying competitive. Azure’s ecosystem offers the tools and infrastructure needed to leverage stream processing, enabling organizations to create innovative and future-proof solutions. Embracing this approach unlocks new opportunities for innovation and competitive advantage in a constantly changing digital landscape.
